A lightweight solution that allows amateur teams and players to use AI for event recording using their smartphones, providing an easy way to share and replay practice sessions.

- Helped sports technology startup NeuinX complete the end-to-end user experience for its B2C product, from wireframing to high-fidelity designs.

- Saves the work of 20 recorders per game in basketball competitions.

- Dedicated to combining computer vision with AI to significantly improve the accuracy of basketball game scoring and data collection, reaching about 95%.

- Informed design decisions with real user feedback gathered in interviews with players.

- Conducted user testing with 3 elementary schools (non-professional teams) and several professional basketball leagues (under NDA).

- Iterate on the previous-generation product (iPad Professional Player Edition) based on user feedback

- Interviews with amateur players

- Analysis of similar products in the market

- Use Jobs-to-be-Done to break down user tasks and plan core functionalities

- Transform the defined core functionalities into wireframes

- Create mockups and prototypes for expert interviews to gather feedback

- Conduct usability tests with the Alpha version

- Collect think-aloud feedback from end users as they work through designed tasks in the Alpha version, to guide design optimization

- Feedback surveys

- Use Hotjar to observe recordings and optimize the user experience

Introduction

NeuinX Play is a lightweight solution that lets amateur teams and players use AI to record game events with their smartphones. It makes practice sessions easy to share and replay.

The sports recording market has long had two pain points.

Firstly, most professional games still rely on manual recording. In the NBA, for example, a single game requires 20 recorders, which incurs high personnel costs. Even with trained professionals, human error is inevitable in a fast-paced game.

Secondly, while AI image recording systems exist on the market, they typically require dedicated camera setups, so only large events and professional teams can afford them; they are out of reach for most colleges, high schools, and non-professional teams. A variety of sports image recognition software is also available, but most of it requires high-resolution footage for analysis, and therefore expensive hardware. This poses a significant cost challenge for non-professional teams such as those at high schools and universities.

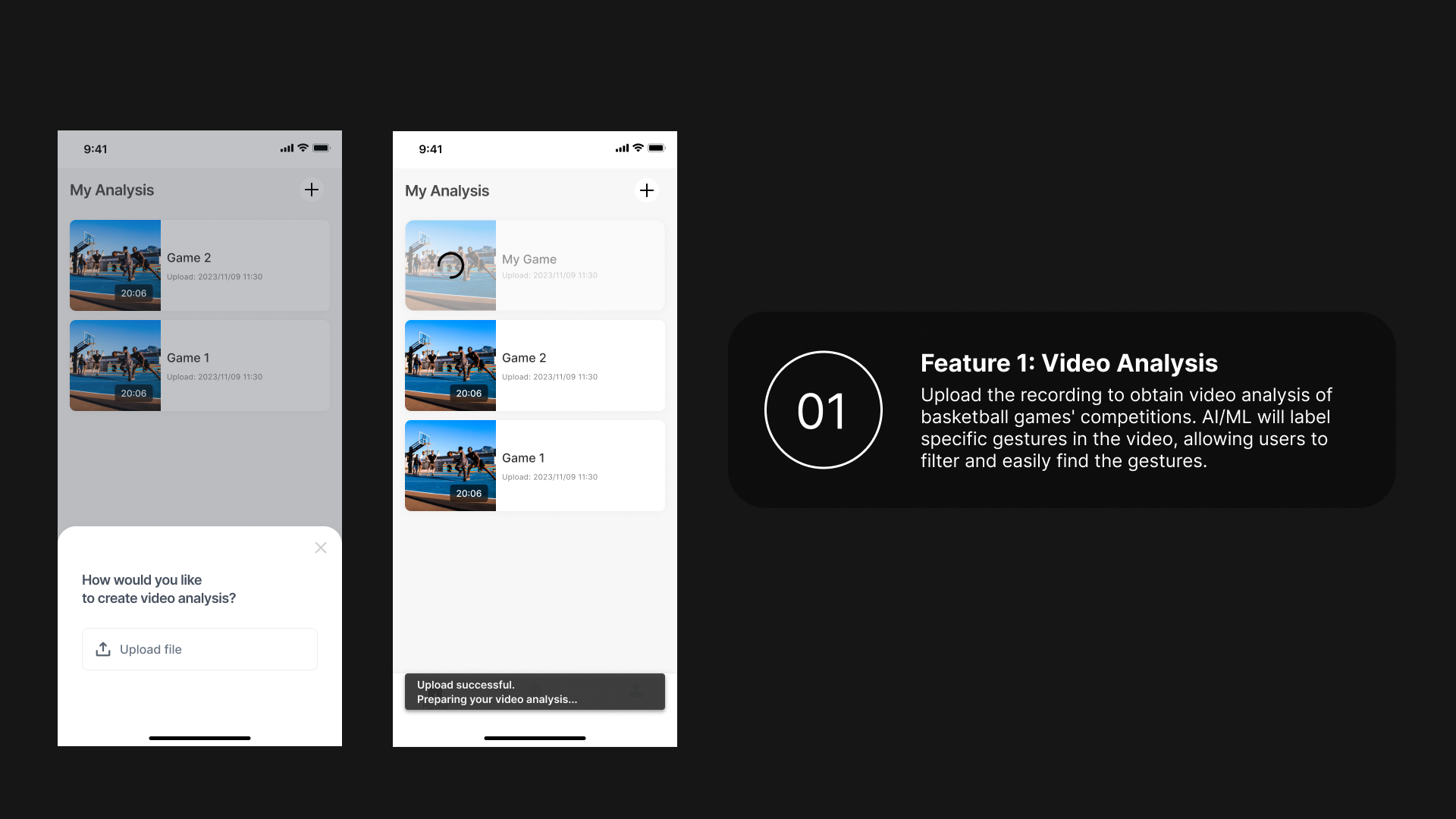

NeuinX Play addresses these challenges by utilizing smartphone footage for basketball event analysis. Teams can upload videos recorded on their phones to the NeuinX system after the game, swiftly documenting information such as shot attempts, slam dunks, layups, and scoring. This brings the value and enjoyment of professional-level event recording to amateur users.

In the previous generation of products, we collaborated with professional league teams and obtained user feedback, which served as the design basis for the first-generation amateur user product. Feedback from professional users provided us with a clear product positioning: tablets are suitable for professional users who require many options and high accuracy in AI-assisted annotations, while mobile phones are more suitable for addressing pain points during amateur practice.

After receiving feedback from professional teams, we conducted guerrilla testing and covert observational research with amateur users at event venues.

We discovered that professional production teams invested significant resources in capturing detailed shots and focusing on players on large screens, sometimes incorporating special effects to enhance audience engagement.

Random sampling and recording at event venues revealed that users most commonly used apps, often several at once, for four things: looking up information before the game, capturing in-game highlights such as three-point shots or slam dunks, quickly editing and sharing clips on social media during breaks, and scanning QR codes after the game to obtain official data.

Surprisingly, one of the most exciting moments for amateur users throughout their journey was the strategic use of special effects to disrupt free throws, adding an unexpected layer of enjoyment.

We collected products with similar functionalities on the market and summarized their core features. In addition to annotation features directly related to the product, we included products from event-related platforms, because users expressed a need for data before watching games.

Interestingly, while watching games, amateur users not only used AI-generated annotations to review certain actions, as anticipated, but also readily used social media apps to edit exciting game moments into Reels and share them.

Editing proved to be a pain point for users. Because sharing contributes significantly to product promotion and memorability, we also included video products with sharing features in our comparison as a reference for product design.

After watching a game, users immediately desired score data reports. This behavior, similar to the process of scanning and storing data into a user's app after an InBody body composition measurement, prompted us to include the scanning and data-sharing flow of the InBody body composition app as a reference.

After analyzing data from on-site visits and interviews, we used the Jobs-to-be-Done framework to organize each user's story from their starting point (A) to their desired outcome (B).

Once we identified user pain points, we utilized dot voting to categorize must-have and nice-to-have features for the MVP, determining the core functionalities to be released at different stages.

With the core functionalities determined, we created a series of user stories to manage requirements.

Wireframes were developed based on the organized user stories, serving as the foundation for generating task flows.

After finalizing the main color scheme and product prototype, we crafted an initial version for expert feedback.

Because annotation is not a concept that casual users readily understand, and the prototype could not demonstrate actual AI functionality, collecting feedback from amateur users through prototypes proved challenging.

Hence, we initially gathered expert feedback.

After the first-stage modifications, we promptly delivered a low-cost test version to the front-end development team.

Usability testing showed that the original user flow made it hard for users to find the core functionalities.

We therefore strengthened the visual hierarchy of the add button on the homepage. In addition, because real data produced a large number of automatically annotated segments, we enlarged the draggable area to make it easier to use.

Upon the completion of the trial period, we immediately had users fill out a System Usability Scale (SUS) questionnaire to measure whether the product's usability met their needs.
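For readers unfamiliar with SUS, the sketch below shows how a questionnaire is conventionally scored. It follows the standard SUS scoring rule (ten items answered on a 1 to 5 scale, odd items positively worded, even items negatively worded), not anything specific to the NeuinX study. The function names `sus_score` and `mean_sus` and the sample answers are hypothetical and are not project data.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for one respondent's ten answers (each 1-5)."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 item responses")
    if any(answer not in (1, 2, 3, 4, 5) for answer in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for index, answer in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded: contribution = answer - 1
            total += answer - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded: contribution = 5 - answer
            total += 5 - answer
    # The summed contributions (0-40) are scaled to a 0-100 range.
    return total * 2.5


def mean_sus(all_responses):
    """Average the SUS scores of several respondents."""
    scores = [sus_score(responses) for responses in all_responses]
    return sum(scores) / len(scores)


# Hypothetical answers from two respondents, for illustration only.
sample = [
    [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
    [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
]
print(mean_sus(sample))
```

Scoring each respondent separately before averaging keeps individual outliers visible, which helps when the test group is small.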

We use Hotjar to observe video recordings and optimize the user experience.

This work is in progress; data and impact are still being collected.

- Saves the work of 20 recorders per game in basketball competitions.

- Dedicated to creating a joyful user flow that combines computer vision with AI to significantly improve the accuracy of basketball game scoring and data collection, reaching about 95%.

- CES 2023 Innovation Awards